A few months ago I bought an Oculus Rift DK2. Although these are designed for VR gaming, they’re actually pretty reasonable stereo displays. They have several desirable features, particularly that the OLED display is pulsed stroboscopically each frame to reduce motion blur. This also means that each pixel is updated at the same time, unlike on most LCD panels, so they can be used for timing-sensitive applications. As of a recent update they are also supported by Psychtoolbox, which we use to run the majority of experiments in the lab. Lastly, they’re reasonably cheap, at about £300.

In starting to set up an experiment using the goggles I thought to check what their effective pixel resolution was in degrees of visual angle. Because the screens are a fixed distance from the wearer’s eye, I (foolishly) assumed that this would be a widely available value. Quite a few people simply took the monocular resolution (1080 x 1200) and divided its vertical extent by the nominal field of view (110° vertically), producing an estimate of about 10.9 pixels per degree. As it turns out, this is pretty much bang on, but that wasn’t guaranteed, because the lenses produce increasing levels of geometric distortion (bowing) at more eccentric locations. This might have the effect of concentrating more pixels in the centre of the display, increasing the number of pixels per degree there.

Anyway, I decided it was worth verifying these figures myself. Taking a cue from methods we use to calibrate mirror stereoscopes, here’s what I did…

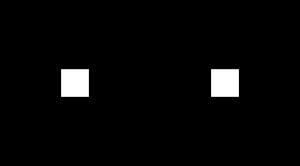

First I created two calibration images, each consisting of a black background with either one central square or two lateralised squares. All the squares were 200 pixels wide (though this isn’t crucial), and the one with two squares was generated at the native resolution of the Oculus Rift (2160×1200). Here’s how the two-square image looks:

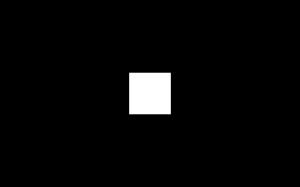

And here’s how the other one, with only one square, looks:

These images were created with a few lines of Matlab code:

    ORw = 2160;  % full width of the oculus rift in pixels
    ORh = 1200;  % height of the oculus rift in pixels
    CSw = 1440;  % width of other computer's display in pixels
    CSh = 900;   % height of other computer's display in pixels
    ORs = 200;   % width of the squares shown on the rift
    CSs = 200;   % width of the square shown on the computer's display

    % two-square image for the rift: one square centred in each half
    a = zeros(ORh,ORw);
    a((1+ORh/2-ORs/2):(ORh/2+ORs/2),(1+ORw/4-ORs/2):(ORw/4+ORs/2)) = 1;
    a((1+ORh/2-ORs/2):(ORh/2+ORs/2),(1+3*ORw/4-ORs/2):(3*ORw/4+ORs/2)) = 1;
    imwrite(a,'ORimage.jpg','jpeg')

    % one-square image for the other display: a single central square
    a = zeros(CSh,CSw);
    a((1+CSh/2-CSs/2):(CSh/2+CSs/2),(1+CSw/2-CSs/2):(CSw/2+CSs/2)) = 1;
    imwrite(a,'CSimage.jpg','jpeg')

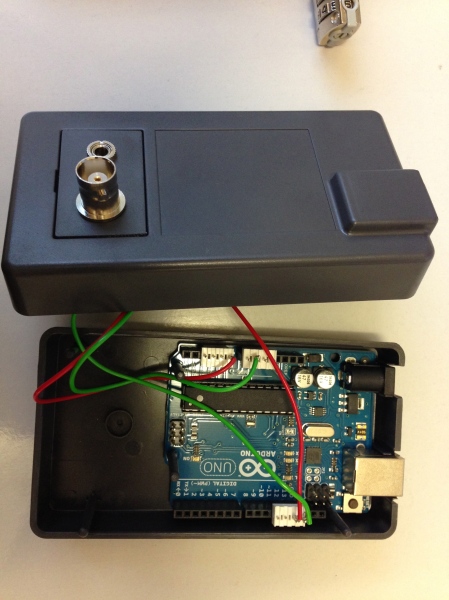

I then plugged in the Rift, and displayed the two-square image on it, and the one-square image on an iPad (though in principle this could be any screen, or even a printout). Viewed through the Rift, each square goes to only one eye, and the binocular percept is of a single central square.

Now comes the clever bit. The rationale behind this method is that we match the perceived size of a square shown on the Rift with one shown on the iPad. We do this by holding the goggles up to one eye, with the other eye looking at the iPad. It’s necessary to do this at a bit of an angle, so the square gets rotated to be a diamond, but we can rotate the iPad too to match the orientation. I found it pretty straightforward to get the sizes equal by moving the iPad forwards and backwards, and using the pinch-to-zoom operation.

Once the squares appeared equal in size I put the Rift down, but kept the iPad position fixed. I then measured two things: the distance from the iPad to my eye, and the width of the square on the iPad screen. The rest is just basic maths:

The iPad square was 7.5cm wide, and matched the Rift square at 24cm from the eye. At that distance an object 1cm wide subtends about 2.4° of visual angle (because at 57cm, 1cm ≈ 1°, so at 24cm, 1cm ≈ 57/24 ≈ 2.4°). [Note, for the uninitiated, the idea of degrees of visual angle is that you imagine a circle that goes all the way around your head, parallel to your eyes. You can divide this circle into 360 degrees, and each individual degree will be about the size of a thumbnail held at arm’s length. The reason people use this unit is that it can be calculated for a display at any distance, allowing straightforward comparison of experimental conditions across labs.] That means the square is 2.4*7.5=18° wide. Because this is matched with the square on the Rift, the Rift square is also 18° wide. We know the square on the Rift is 200 pixels wide, so that means 18° = 200 pixels, and 1° = 200/18 ≈ 11 pixels. So, the original estimates were correct, and the pixel density at the centre of the screen is indeed about 11 pixels/deg.
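For anyone who wants to repeat the arithmetic with their own measurements, here is a minimal sketch in Matlab. The variable names are mine and purely illustrative. It computes the rule-of-thumb estimate used above, and also the exact trigonometric angle, on the assumption that the square is flat and centred on the line of sight.

```matlab
% Measurements reported in the text
squareWidthCm  = 7.5;  % width of the matched square on the iPad
viewDistCm     = 24;   % distance from the eye to the iPad
squareWidthPix = 200;  % width of the square shown on the Rift

% Rule of thumb: 1 cm subtends roughly 1 deg at 57 cm
degPerCm  = 57 / viewDistCm;             % degrees per cm at this distance
approxDeg = degPerCm * squareWidthCm;    % approximate width in degrees
approxPpd = squareWidthPix / approxDeg;  % approximate pixels per degree

% Exact angle (assumes a flat square centred on the line of sight)
exactDeg = 2 * atand((squareWidthCm / 2) / viewDistCm);
exactPpd = squareWidthPix / exactDeg;

fprintf('Rule of thumb: %.1f deg, %.1f pix/deg\n', approxDeg, approxPpd);
fprintf('Exact:         %.1f deg, %.1f pix/deg\n', exactDeg, exactPpd);
```

At this size and distance the two approaches differ only slightly, and both land close to 11 pixels per degree. The rule of thumb drifts further from the exact value as the angle gets larger.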

This is actually quite a low resolution, and individual pixels are easily visible. That isn’t surprising, since the screen is close to the eye and the whole point of the Rift is to provide a wide field of view rather than a high central resolution. But it’s sufficient for some applications, and its small size makes it a much more portable stereo display than either a 3D monitor or a stereoscope. I’m also pleased I was able to independently verify other people’s resolution estimates, and have developed a neat method for checking the resolution of displays that aren’t as physically accessible as normal monitors.

Posted by bakerdh
